Meiqi Cheng

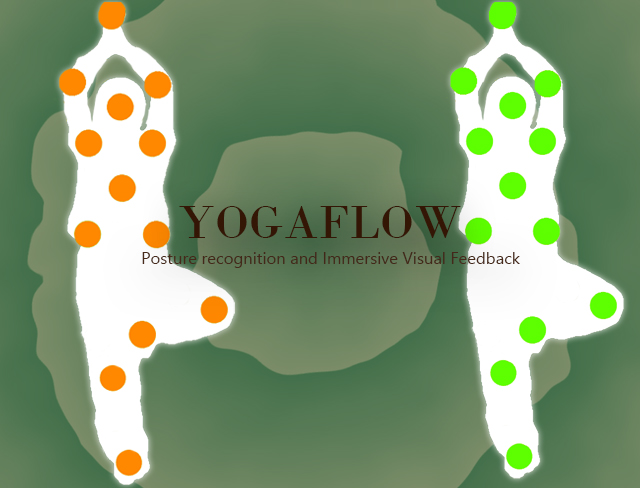

Designing a Yoga Application Based on Posture Recognition and Immersive Visual Feedback

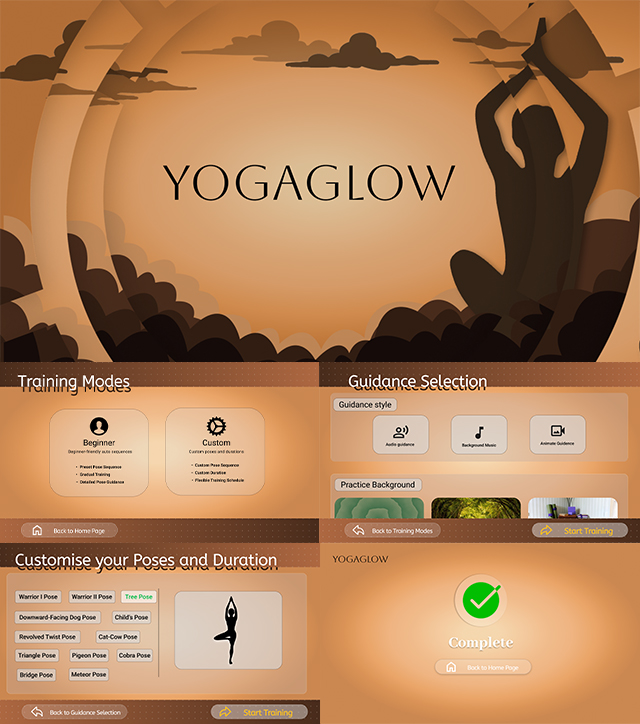

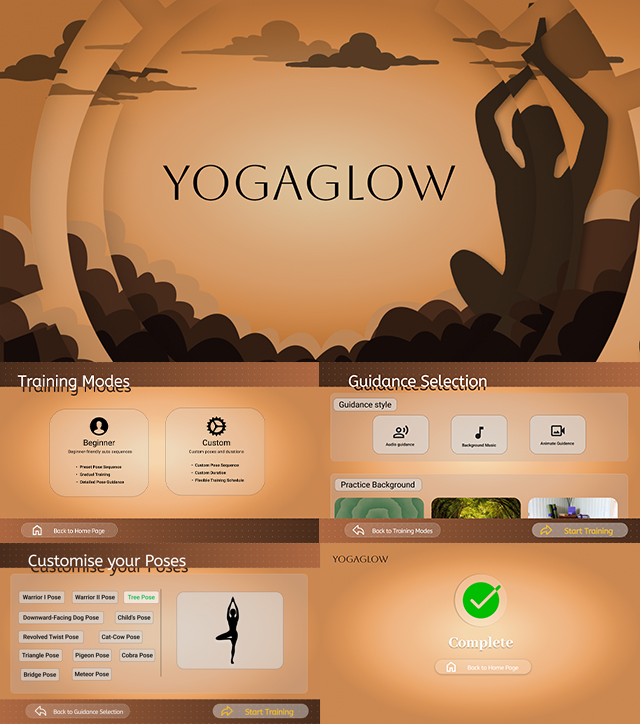

YogaGlow is a yoga application based on posture recognition and immersive visual feedback, designed to improve users’ movement accuracy and sense of immersion. The research is motivated by the fact that most existing yoga applications still rely on static guidance formats such as videos, images, voice instructions, or text. These formats cannot perceive or correct users’ actual movements in real time, which makes it difficult to engage practitioners, ensure that postures are performed correctly, and achieve a true integration of body and mind. To address these issues, YogaGlow uses Kinect v2 for skeletal tracking and TouchDesigner to map the skeletal data to visual nodes, creating an interactive feedback system. During practice, the system provides feedback such as joint nodes that change colour when a posture is performed correctly, together with animations that guide the breathing rhythm. These mechanisms help users correct their postures in real time while strengthening their focus during training. Compared with conventional yoga applications, this system, which combines posture recognition with visual effects, significantly improves users’ understanding of movements, engagement, and sense of immersion. The project aims to fill the existing gap in posture accuracy feedback for yoga applications, and it also addresses a broader question: as today’s yoga apps increasingly emphasise body shaping and fitness functions, is it still possible to use visual effects to distil the spiritual essence and cultural depth of yoga, and to revive the original experience of “unity of body and mind”? The goal is for YogaGlow to be more than just a fitness tool.
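The colour feedback on joint nodes can be illustrated with a minimal sketch. The abstract does not state how YogaGlow decides that a posture is correct, so the approach below is an assumption: it compares the angle at a joint, computed from three tracked 3D positions, with a target angle and a tolerance. The function names, the colour values, and the 15-degree tolerance are hypothetical and are not taken from the project.

```python
import math

# Hypothetical RGB colours for the feedback nodes (not from the source).
GREEN = (0.0, 1.0, 0.0)   # joint angle is within tolerance
RED = (1.0, 0.0, 0.0)     # joint angle is outside tolerance
GREY = (0.5, 0.5, 0.5)    # angle could not be computed


def joint_angle(a, b, c):
    """Return the angle in degrees at point b, formed by the segments b-a and b-c.

    Each argument is an (x, y, z) tuple, for example the shoulder, elbow,
    and wrist positions reported by a skeletal tracker.
    Returns None if two of the points coincide.
    """
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        return None
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def feedback_colour(angle, target, tolerance=15.0):
    """Pick a node colour by comparing a measured angle with a target angle."""
    if angle is None:
        return GREY
    return GREEN if abs(angle - target) <= tolerance else RED


# Example: an arm bent at a right angle, checked against a straight-arm target.
elbow_angle = joint_angle((1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 1.0, 0.0))
colour = feedback_colour(elbow_angle, target=180.0)
```

In a setup like the one described, a check of this kind could run once per frame for each joint of interest, with the resulting colour passed to the corresponding visual node. A per-joint tolerance would let forgiving poses and strict poses share the same logic.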